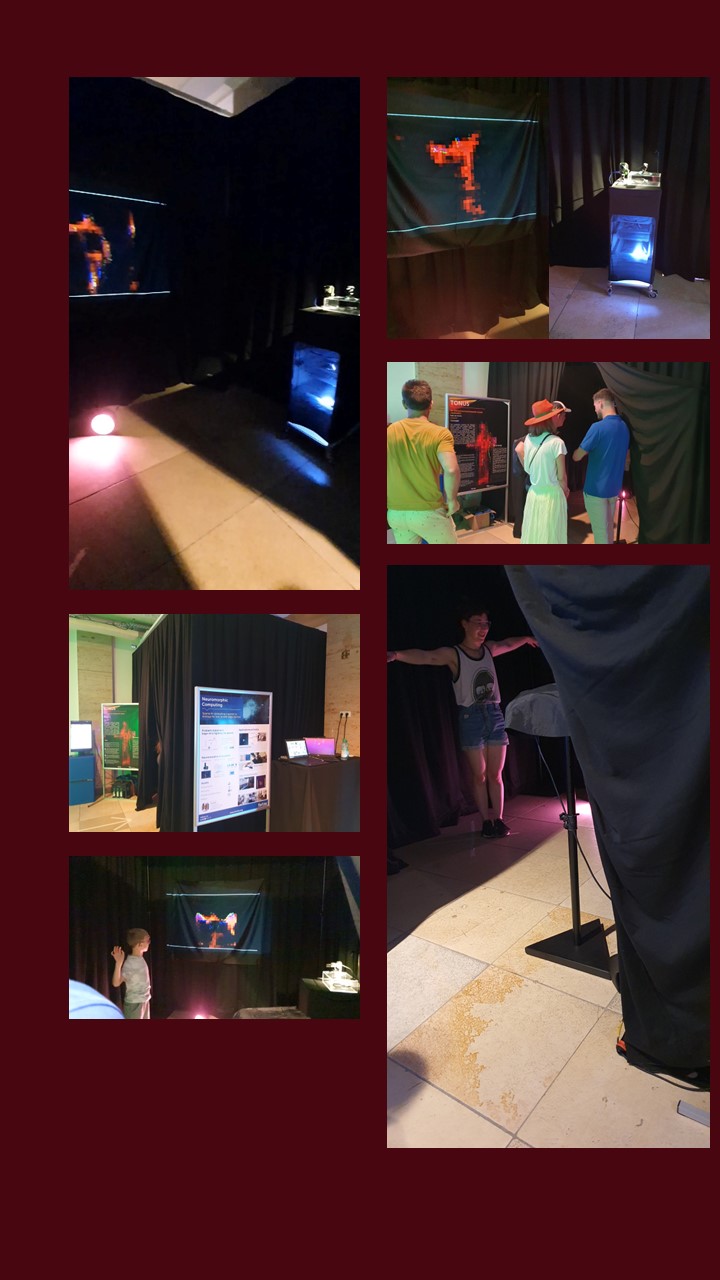

Interactive sound installation

TONUS

For the first time, motion tracking is performed using neuromorphic computing, an emerging AI technology inspired by the human brain. Unlike conventional AI, it simulates the fine details of how biological neurons behave. This makes the machine remarkably similar to humans.

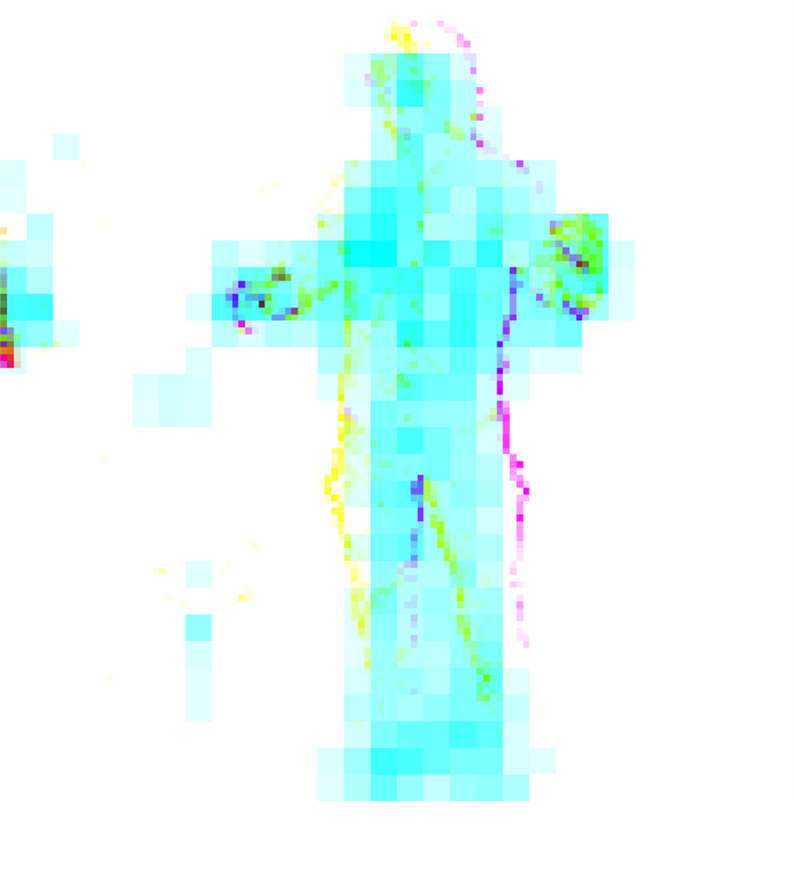

Neuromorphic event-based cameras transmit the signatures of movement rather than entire images: each pixel reports only changes in brightness. The person is therefore de facto unrecognizable; only the traces of their movements remain.
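To make the idea concrete, here is a minimal sketch that emulates an event-based sensor in software from two ordinary grayscale frames: a pixel emits an event only when its log intensity changes by more than a contrast threshold. The function name `frames_to_events`, the threshold value and the `(x, y, polarity)` layout are illustrative assumptions; real sensors do this asynchronously in hardware, pixel by pixel, and this is not the TONUS pipeline.

```python
import numpy as np

def frames_to_events(prev_frame, next_frame, threshold=0.2, eps=1e-3):
    """Emulate an event camera between two grayscale frames (hypothetical helper).

    Returns an (N, 3) int array of (x, y, polarity) rows:
    polarity is +1 where the pixel got brighter, -1 where it got darker.
    """
    # Event sensors respond to changes in log intensity, not absolute brightness.
    delta = np.log(next_frame.astype(float) + eps) - np.log(prev_frame.astype(float) + eps)
    # Only pixels whose change exceeds the contrast threshold produce an event.
    ys, xs = np.nonzero(np.abs(delta) > threshold)
    polarity = np.sign(delta[ys, xs]).astype(int)
    return np.stack([xs, ys, polarity], axis=1)

# A static scene with a single pixel brightening: only that pixel yields an event.
prev = np.full((4, 4), 100, dtype=np.uint8)
nxt = prev.copy()
nxt[1, 2] = 200
print(frames_to_events(prev, nxt))  # [[2 1 1]]
```

Everything that does not move produces no data at all, which is why a still silhouette or a face leaves no recognizable trace.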

The spiking neural network runs on a neuromorphic chip that mimics a biological brain. Our goal is to unsettle the visitor by revealing the neural similarity between them and the machine: sound and light feedback represents the activity of the machine's neurons in an abstract way, blurring the technological separation.
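What "spiking neurons" means can be illustrated with the leaky integrate-and-fire (LIF) model, a common simplification of biological neurons used in many spiking networks. The sketch below is a generic discrete-time LIF neuron; the function name `simulate_lif` and the parameter values are assumptions, and the page does not state which neuron model runs on the TONUS chip.

```python
def simulate_lif(inputs, decay=0.9, threshold=1.0):
    """Simulate one discrete-time leaky integrate-and-fire neuron (illustrative).

    inputs: sequence of input currents, one per time step.
    Returns a list of 0/1 spikes, one per time step.
    """
    v = 0.0          # membrane potential
    spikes = []
    for current in inputs:
        v = decay * v + current   # the potential leaks, then integrates the input
        if v >= threshold:        # crossing the threshold emits a spike...
            spikes.append(1)
            v = 0.0               # ...and resets the potential
        else:
            spikes.append(0)
    return spikes

# A constant weak input makes the neuron fire periodically.
print(simulate_lif([0.3] * 10))  # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
```

The neuron stays silent most of the time and only communicates through brief spikes, which is the sparse, event-driven activity the installation turns into light and sound.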

Another distinctive feature of neuromorphic technology is its very low energy consumption, which is why it is also referred to as "Green AI". The neuromorphic chip is presented so that it takes center stage: its small size and low energy consumption embody the frugality of green artificial intelligence.

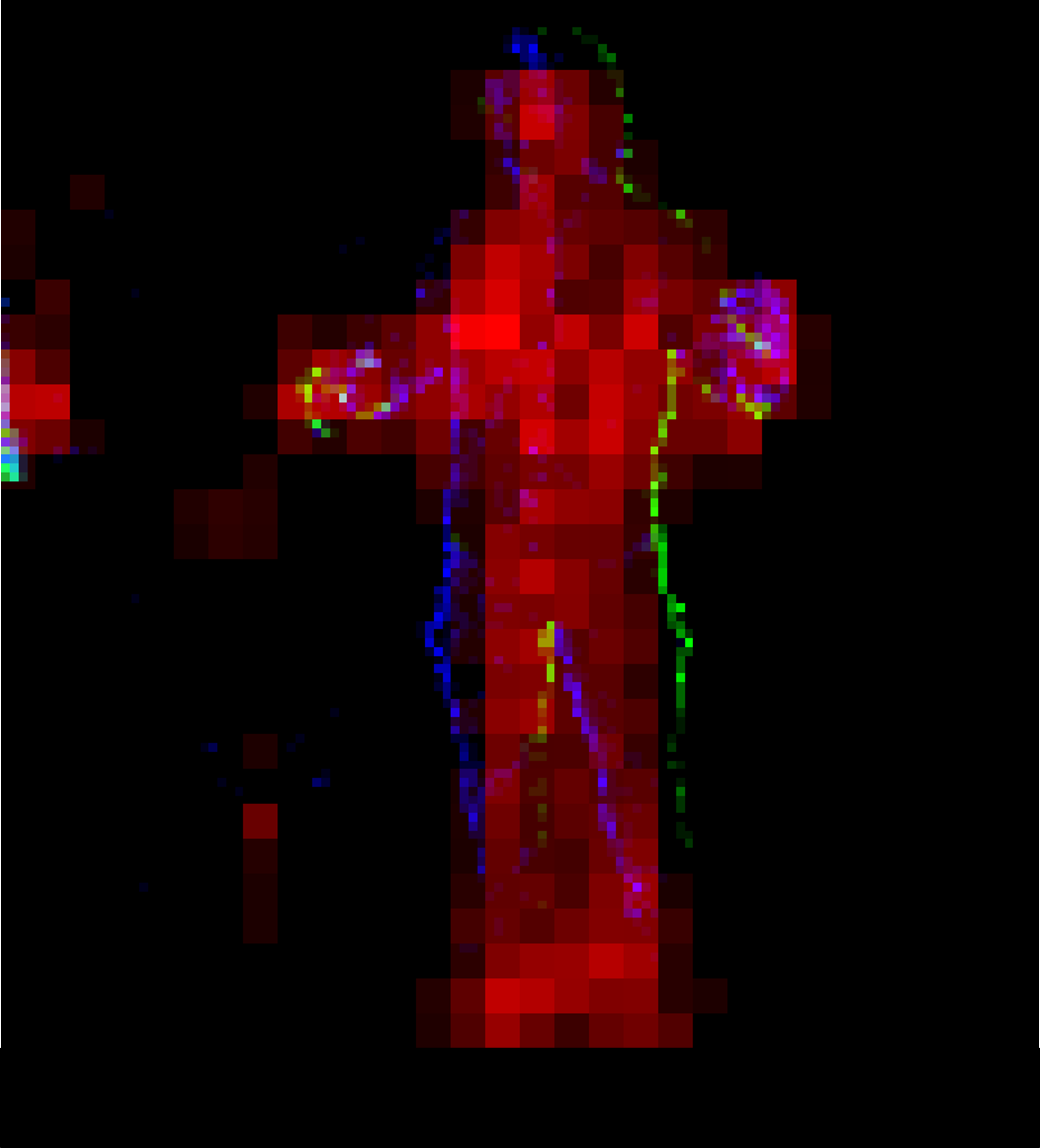

The projected visual feedback combines the direct output of the camera (blue and green shape edges) with the “heatmap” produced by the spiking convolutional neural network (CNN).

The LED installation reacts to the heatmap values of active joints, which also trigger sound clicks, allowing the visitor to both hear and see the neurons' spiking activity.
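The mapping from heatmap to light and sound can be sketched as follows: for each joint, take the peak of its heatmap channel; the peak value sets an LED brightness, and a joint whose peak exceeds a threshold counts as active and triggers a click. The array layout (one channel per joint), the 0 to 255 brightness scale, the threshold and the name `heatmaps_to_feedback` are all assumptions made for illustration, not the installation's actual code.

```python
import numpy as np

def heatmaps_to_feedback(heatmaps, threshold=0.5):
    """Map per-joint heatmaps to LED levels, click triggers and joint positions.

    heatmaps: array of shape (num_joints, height, width), values in [0, 1].
    Returns (led_levels, clicks, positions):
      led_levels: one int in 0..255 per joint
      clicks: indices of joints whose peak exceeds `threshold`
      positions: one (x, y) peak coordinate per joint
    Hypothetical helper for illustration.
    """
    num_joints, height, width = heatmaps.shape
    flat = heatmaps.reshape(num_joints, -1)
    peak_idx = flat.argmax(axis=1)                     # where each joint responds most
    peak_val = flat[np.arange(num_joints), peak_idx]   # how strongly it responds
    ys, xs = np.unravel_index(peak_idx, (height, width))
    led_levels = [int(round(255 * float(np.clip(v, 0.0, 1.0)))) for v in peak_val]
    clicks = [j for j in range(num_joints) if peak_val[j] > threshold]
    positions = [(int(x), int(y)) for x, y in zip(xs, ys)]
    return led_levels, clicks, positions

hm = np.zeros((2, 4, 4))
hm[0, 1, 3] = 0.8   # joint 0: strong response at x=3, y=1
hm[1, 2, 0] = 0.2   # joint 1: weak response at x=0, y=2
print(heatmaps_to_feedback(hm))  # ([204, 51], [0], [(3, 1), (0, 2)])
```

Both joints light their LEDs in proportion to their activity, but only the strongly active joint crosses the threshold and produces a click.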

We use pose estimation with a neuromorphic camera to compute a localized skeleton representation of the visitor. The neuromorphic chip runs the encoder part of the AI algorithm, which generates very sparse data and uses little energy. The visitor's body movements, as well as their location and pose, are tracked and drive changes in the soundscapes.

The installation has a dual purpose. It exposes the inner activity of the neuromorphic brain through visual feedback that is pure yet not obvious. It also revisits the stylistic exercise of body-controlled sound, with a more direct dialogue with the machine and richer soundscapes.
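How a tracked skeleton might steer a soundscape can be sketched with a deliberately simple mapping: the horizontal centroid of the joints (the visitor's localization) sets a stereo pan, and the vertical extent of the skeleton (a crude pose descriptor) sets a spread value. Both controls, the function name `skeleton_to_sound_params` and the mapping itself are hypothetical; the page does not describe which sound parameters TONUS actually controls.

```python
def skeleton_to_sound_params(joints, width, height):
    """Map a 2D skeleton to two illustrative soundscape controls (hypothetical).

    joints: list of (x, y) pixel coordinates, one per detected joint.
    Returns a dict with:
      pan: -1.0 (left) to 1.0 (right), from the horizontal centroid
      spread: 0.0 to 1.0, skeleton height relative to the frame height
    """
    if not joints:
        return {"pan": 0.0, "spread": 0.0}   # nobody detected: neutral settings
    xs = [x for x, _ in joints]
    ys = [y for _, y in joints]
    centroid_x = sum(xs) / len(xs)
    pan = 2.0 * centroid_x / (width - 1) - 1.0    # localization -> stereo position
    spread = (max(ys) - min(ys)) / (height - 1)   # pose extent -> e.g. sound density
    return {"pan": pan, "spread": spread}

# A visitor standing at the far left edge, body spanning most of the frame.
print(skeleton_to_sound_params([(0, 10), (0, 90)], width=101, height=101))
# {'pan': -1.0, 'spread': 0.8}
```

In a real installation such values would be smoothed over time before being sent to a sound engine, so that small tracking jitters do not produce audible artifacts.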

TONUS publication: Neuromorphic human pose estimation for artistic sound co-creation